Groq®

Hyperfast LLM inference running on custom-built LPUs

Description

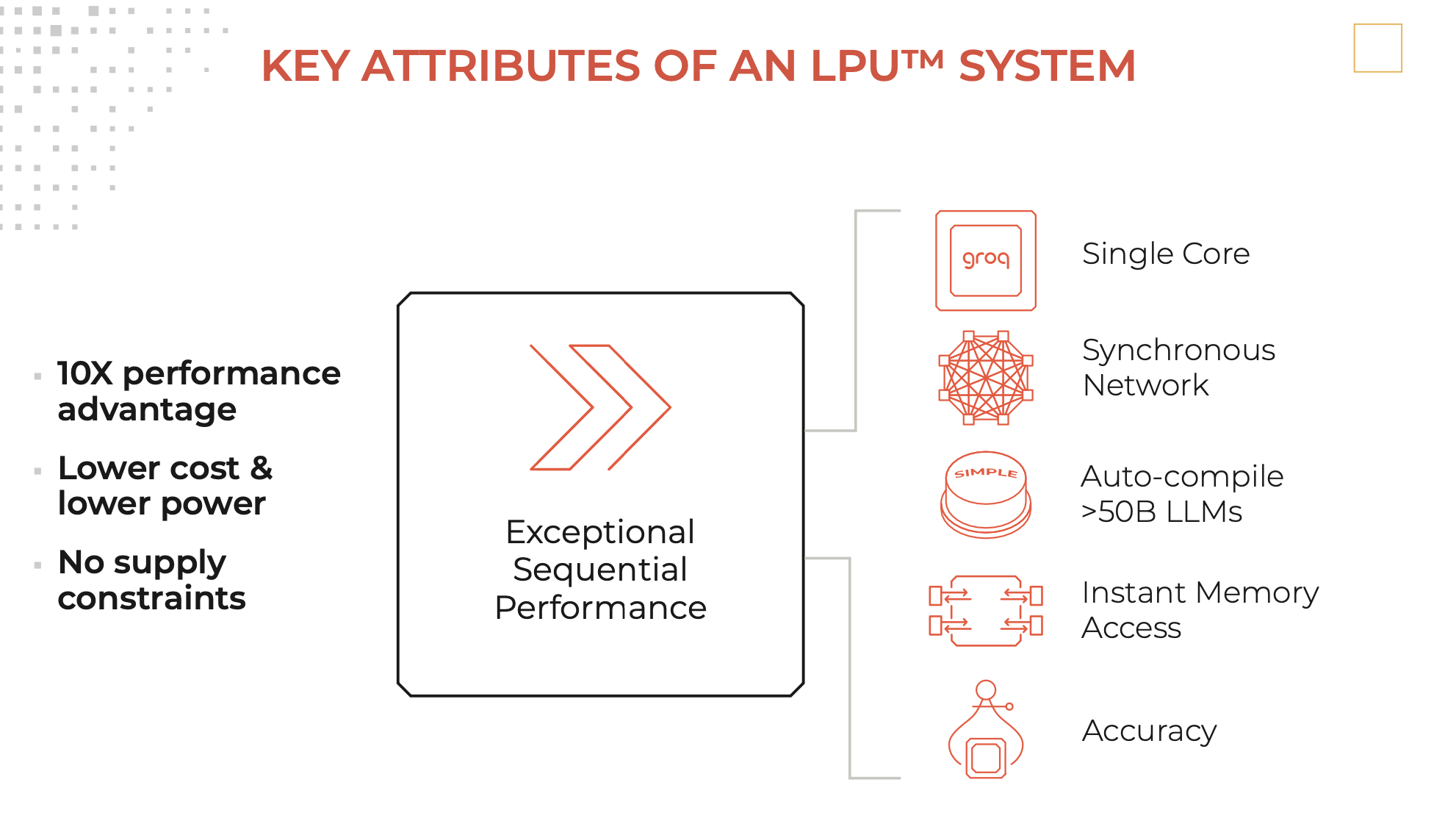

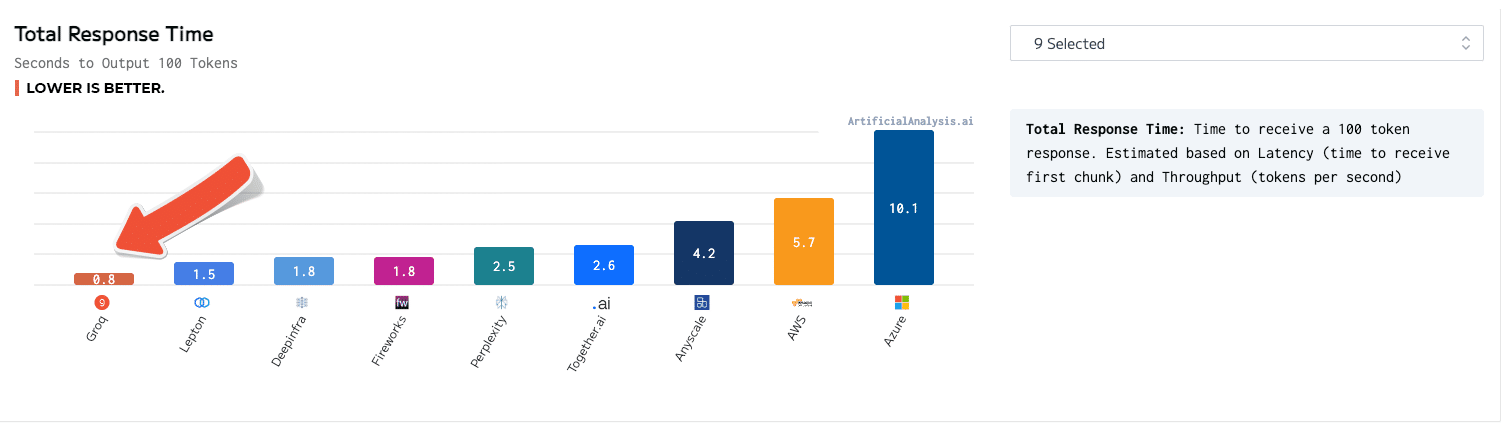

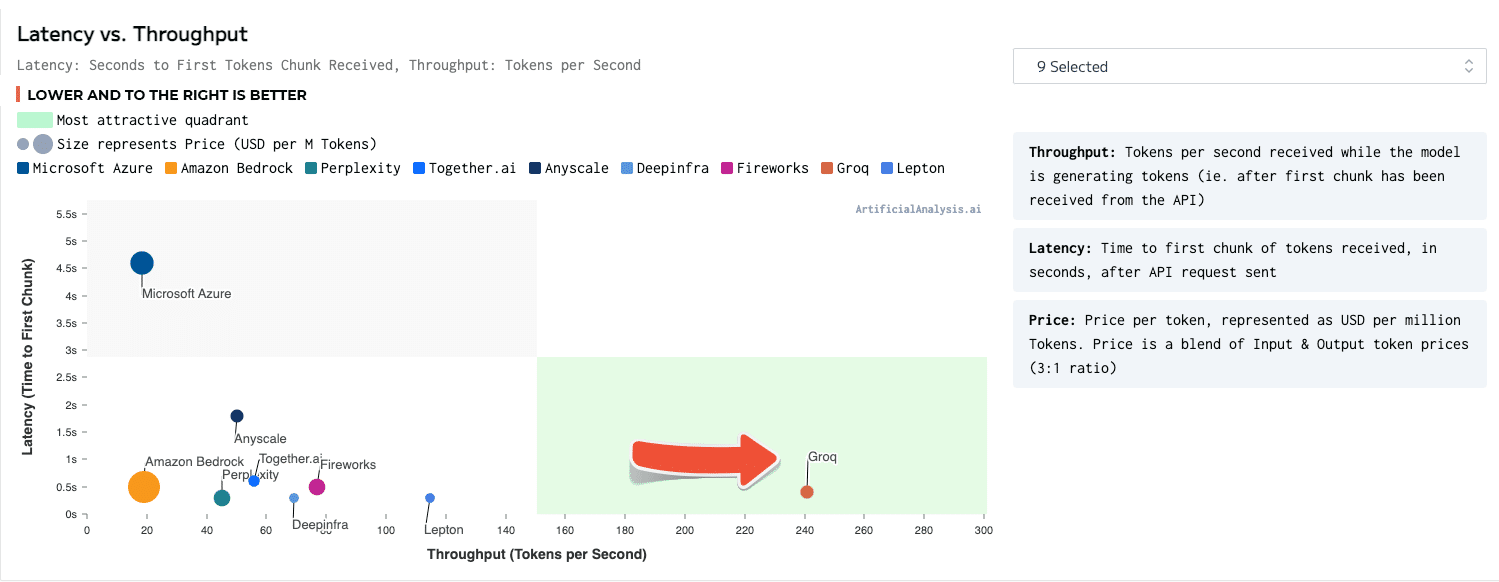

An LPU Inference Engine (LPU stands for Language Processing Unit™) is a new type of end-to-end processing unit system that provides the fastest inference, at roughly 500 tokens per second.