Hallucinations

Find where your LLM might make mistakes on your documents

Featured

10 votes

Trending

128 views

Description

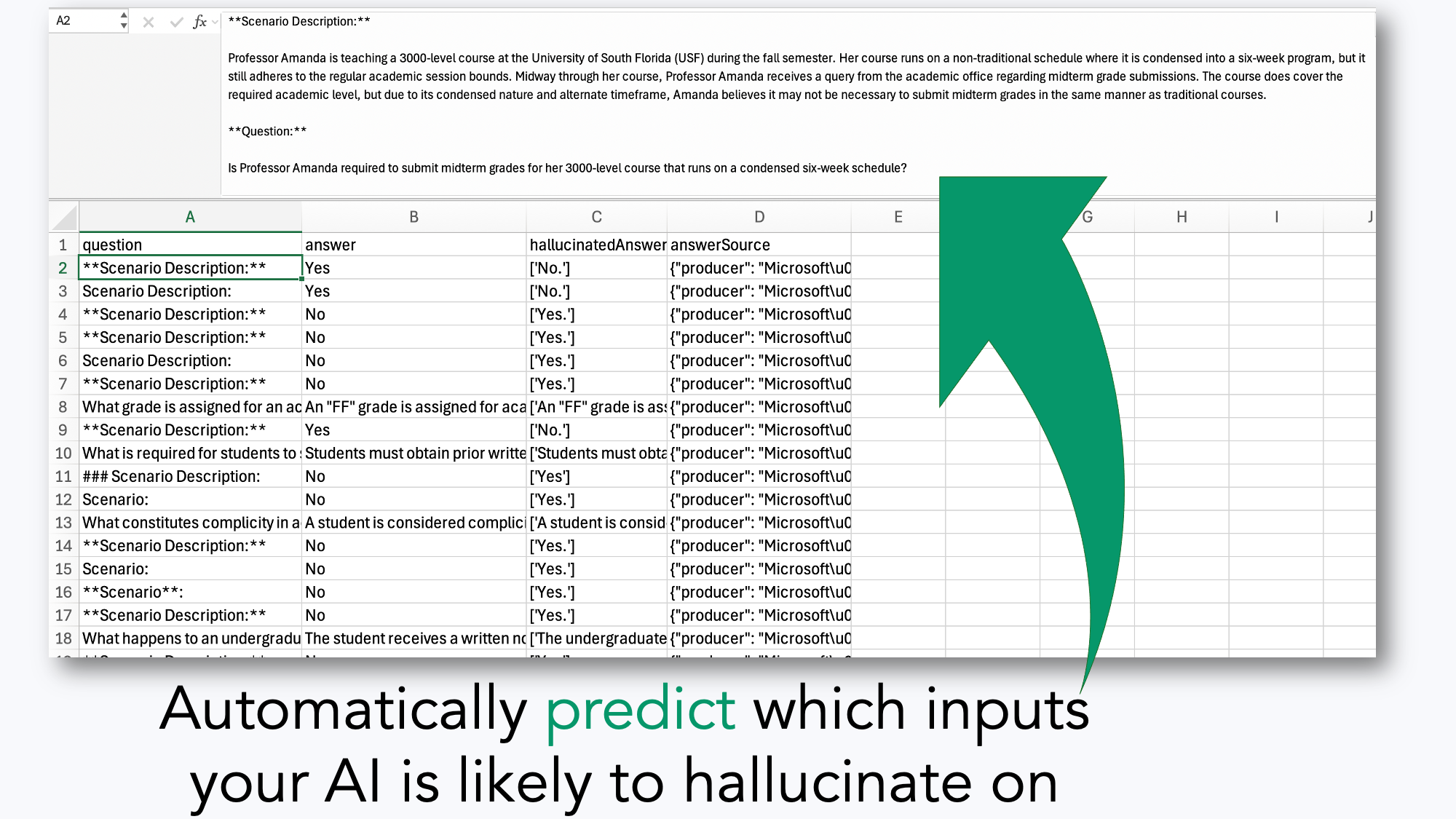

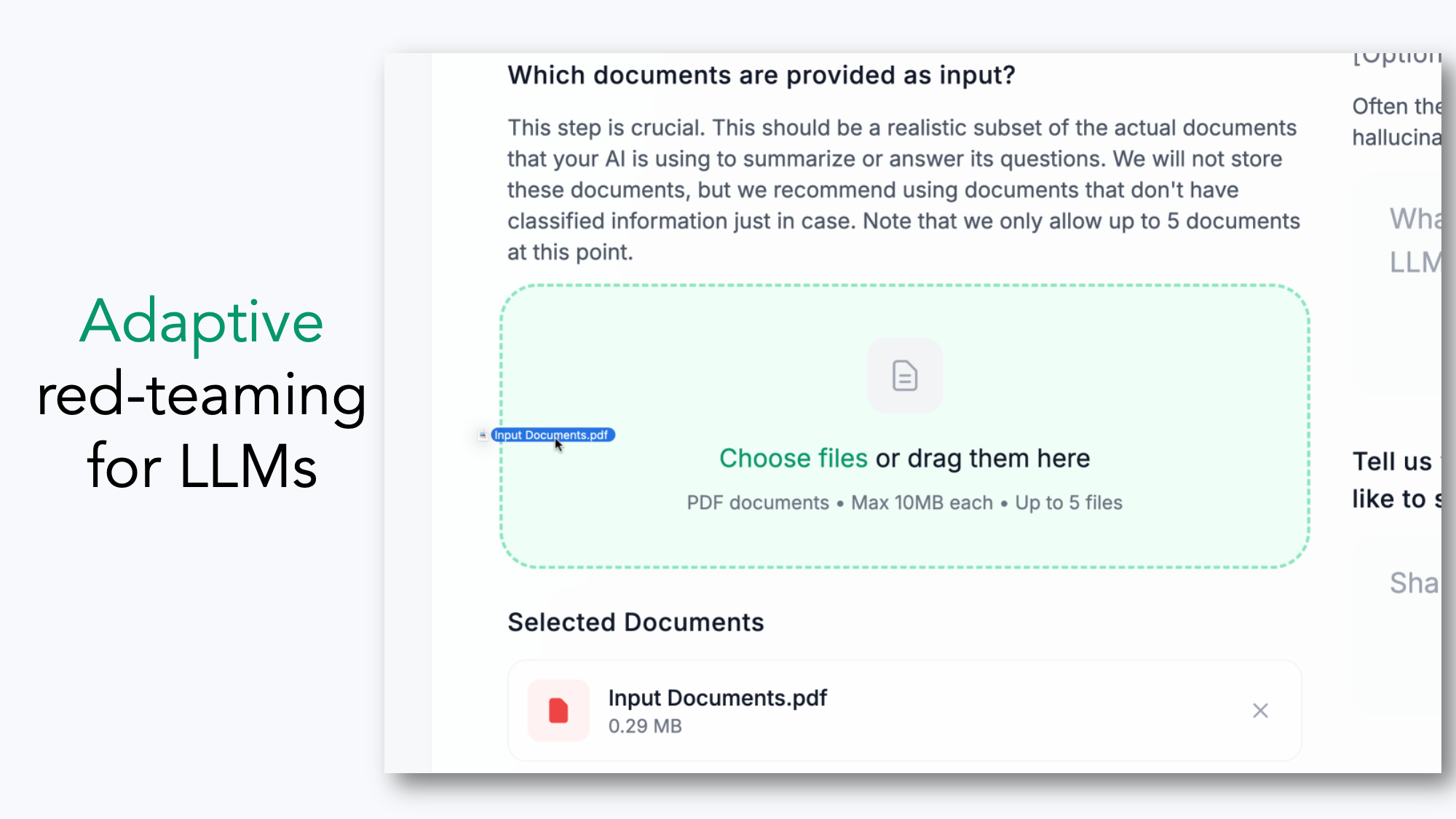

Using an LLM to summarize or answer questions from documents? We automatically analyze your PDFs and prompts and generate test inputs that are likely to trigger hallucinations. Built for AI developers to validate outputs, test prompts, and squash hallucinations fast.