Patrei API that blocks prompt injection

Just stop jailbreaks & prompt hacks with one API call.

Featured

5 Votes

Description

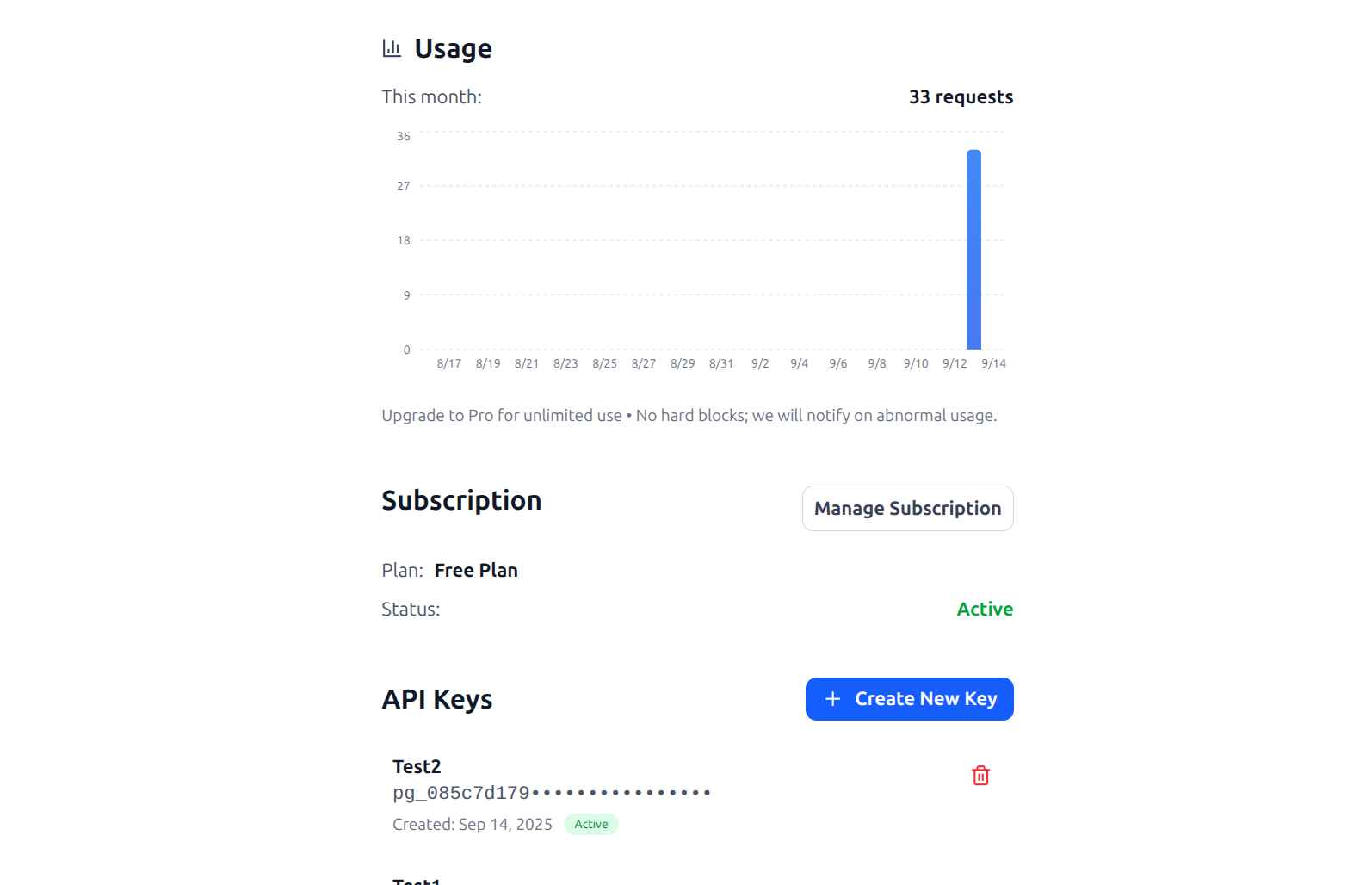

It's clear that LLMs are prone to prompt injection. My prompt injection scanner scans prompts for attacks before they reach your LLM models. What is returned is a score that indicates risk. Fast, cheap, and constantly updated based on your feedback.