LLaMA

A foundational, 65-billion-parameter large language model

Description

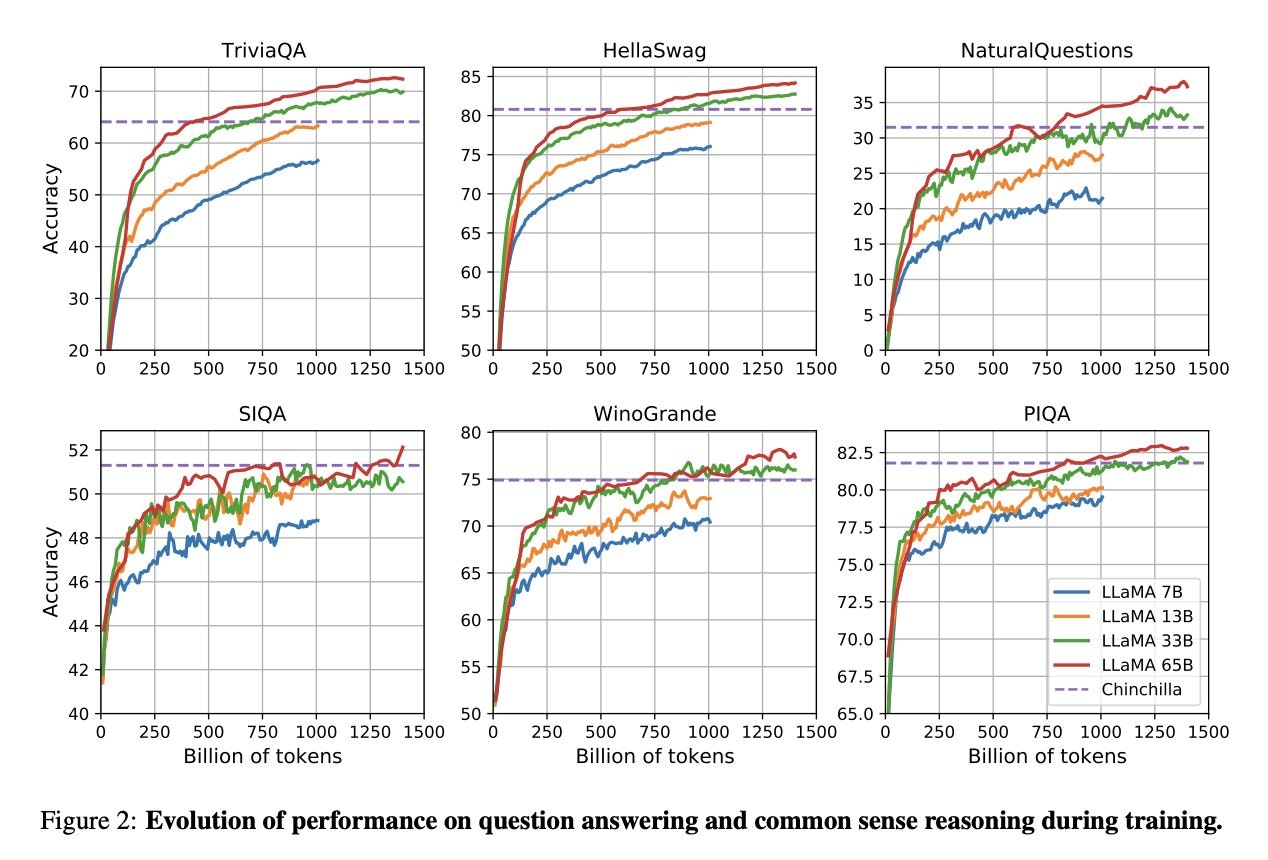

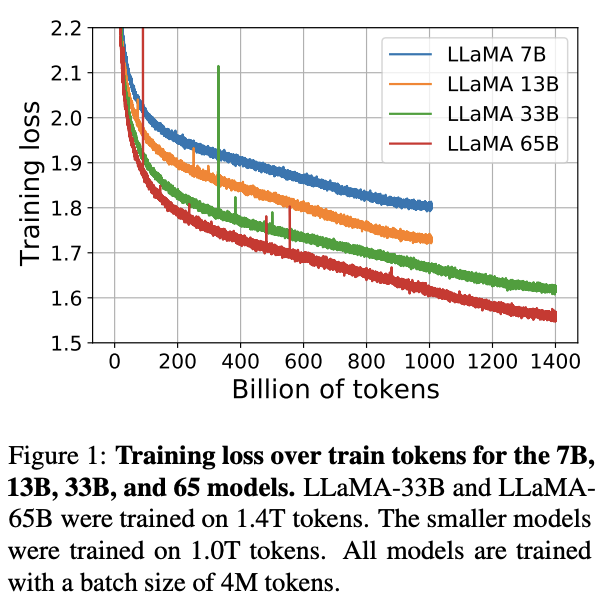

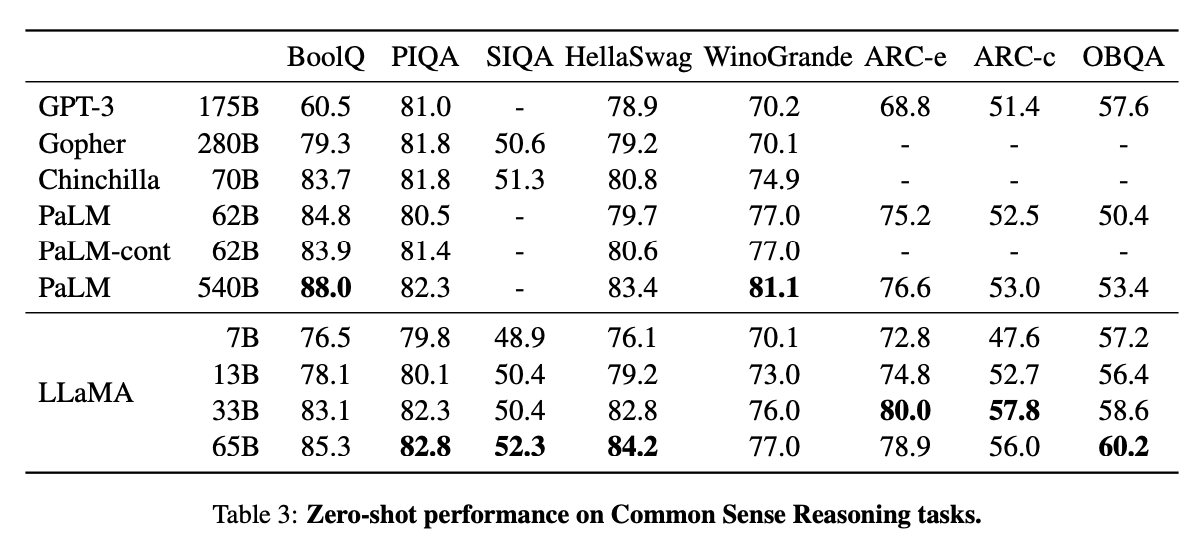

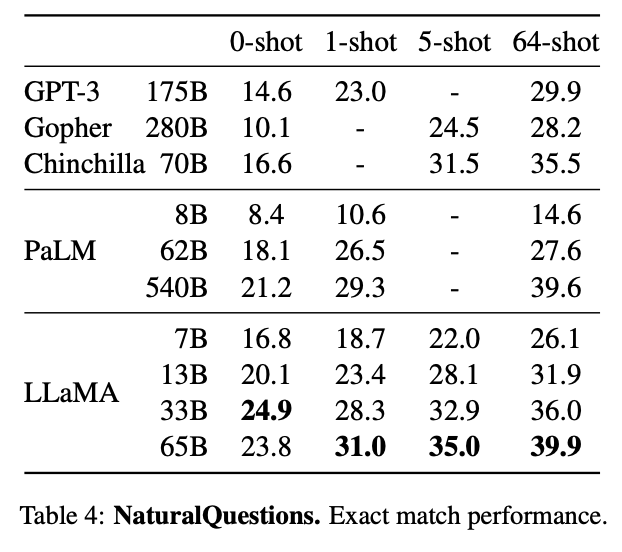

LLaMA is a collection of foundation language models ranging from 7B to 65B parameters. Its authors show that it is possible to train state-of-the-art models using publicly available datasets exclusively, without resorting to proprietary and inaccessible datasets.